When starting your career in data analytics or data science, you’ll find a lot of companies rely on many sources of data. Sometimes the data sources are stored in different formats or technologies. For example, the company I work for uses both SQL and NoSQL solutions making it difficult to join the raw data together.

Using data transformation techniques, it’s possible to bring the data out of their silos, reformat them and store them in a central location called a data warehouse.

In this data transformation tutorial, we will simulate working with SQL and NoSQL data by walking through the steps of transforming JSON data into tabular data in SQL Server. By the end of this article you’ll have learned the following about data transformation:

- What is data transformation?

- How does data transformation work?

- How to perform a data transformation

- The benefits of data transformation

- The challenges of data transformation

- Summary

Feel free to use the clickable menu to skip ahead to any section. With that said, let’s get started!

1. What is data transformation?

At a high level, data transformation is the operations by which source data are formatted or reshaped to fit the constraints of downstream systems or processes. Data transformation is often considered a data engineering or data governance task, typically occurring in extract, transform, load or extract, load, transform (ETL/ELT) pipelines designed to process raw data.

These data transformation processes take extracted source data and add to it, delete from it, or format it before storing it. In large scale systems, data transformation is usually automated by software used for creating data warehouses and data lakes. However, on smaller scales, data analysts and data scientists oftentimes will need to perform data transformations manually so they can model the data to assist with data-driven decision making.

An explosion in the Internet of Things (IoT) or “smart” devices has resulted in an age of big data. The massive increase in data means it is more important than ever to efficiently process and store data in ways that make it easy to analyze. By creating pipelines and processes to transform their data, organizations ensure they’re able to extract insights.

To help illustrate data transformation in the ETL/ELT processes, we’re going to work through an example. Imagine you’re an analyst at a company that stores structured data via rows and columns in one database and unstructured data via JSON in another database.

In order to perform an analysis, you need to format the unstructured data so it can be joined with the structured data. Whether your process is based around ETL or ELT concepts, the transformation needs to take place so the raw, unstructured data ends up being compatible with the structured data.

Next, let’s look at how data transformation works.

2. How does data transformation work?

Data transformation is a process that involves understanding the data, mapping the data to a destination system, and running the processes to perform the transformation. Before performing data transformation, pre-processing the data might be required. Preprocessing data includes tasks like de-duplicating records, managing outliers, and imputing missing values. Preprocessing functions can be included in a data transformation pipeline.

The data transformation process

To better understand how data transformation works, let’s review the data transformation process by breaking it down into four steps:

- Discovering

- Planning

- Performing

- Reviewing

We’ll go over each step in more detail now.

1. Discovering variables in the source data

Through data discovery, you need to identify variables of interest within the source data and figure out what pre-processing actions need to be performed to facilitate the data transformation.

2. Planning the data transformation

To map the source data to its landing system, you need to determine the structure it needs to be in. In our example, we’ll convert our JSON data to a tabular format of rows and columns. In addition to structure, in this step you’ll decide whether fields need to be renamed, dropped, or aggregated.

3. Performing the data transformation

Several tools or programming languages can be used to perform the data transformation. For example, Microsoft Excel remains one of the most popular tools in data analytics and has several functions and features that can transform data within the spreadsheet. When working with big data, Python and SQL are popular programming languages for data transformation. In our example, we’ll use SQL.

Related watching: What are the key languages data analysts use?

4. Reviewing the data transformation

Once the data transformation has occurred, evaluate it to make sure the results are as expected. For example, use tools to count records or verify duplicates have been removed, or that data aggregations have been performed correctly.

Now that you know all of the steps involved in data transformation, let’s get on with a short tutorial!

3. How to perform a data transformation: An example using SQL

Now that we’ve reviewed how to transform data using the 4-step process, let’s apply the steps using real data, transforming JSON data into tabular data using SQL. Databases relying on SQL have remained some of the most important tools for data management over the last 40 years, so understanding SQL is very important in the field of data analytics.

In this example, I’ll be using Microsoft SQL Server and SQL Server Management Studio. Both can be downloaded for free. If you’re new to SQL, check out this beginner tutorial .

Step 1: Create the source JSON data

JavaScript Object Notation (JSON) is one of the most popular data interchange formats, so there is a good chance you’ll encounter it when working with data and APIs. The JSON format supports hierarchical data structures, making use of objects and arrays. An object is a key/value pair contained within curly brackets {}. For example:

{“key1” : “value1”, “key2”:”value2″}

An array is a list of values contained within brackets []:

[“value1”, “value2”, “value3”]

To keep things simple, we’ll save some JSON data within the same database as our destination table. In the real world, the JSON data could be in a different database or streaming from a completely different system.

Create a database named dataTransformation using the following code:

create database dataTransformationCreate a new table that stores the source JSON data named sourceJSONTable.

use dataTransformation

create table sourceJSONTable (

[jsonID] [int] PRIMARY KEY CLUSTERED IDENTITY(1,1) NOT NULL,

[jsonData] nvarchar(MAX) NOT NULL,

) ON [PRIMARY]Then, populate sourceJSONTable using the following INSERT script:

insert into sourceJSONTable(jsonData)

select '{

"country":"United States",

"states":["California", "Minnesota", "Washington"],

"cities":[

{"CA":"San Diego"},

{"MN":"Minneapolis"},

{"WA":"Seattle"}

]

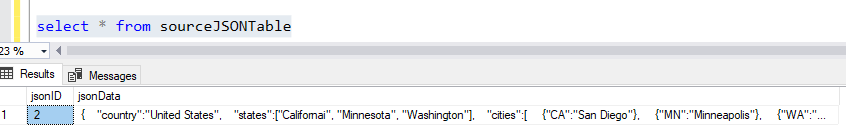

}'Next, you’ll verify the JSON data has been inserted into the sourceJSONTable.

Step 2. Create the destination table

Now that we’ve got JSON data in sourceJSONTable, let’s create our destination table. After creating the table, we’ll go through the four steps for how to transform data (discovering, planning, performing, reviewing).

Create the destination table using the following INSERT script:

use dataTransformation

create table destinationTable (

[tableID] [int] PRIMARY KEY CLUSTERED IDENTITY(1,1) NOT NULL,

[country] nvarchar(50),

[state] nvarchar(50),

[abbreviation] varchar(3),

[city] nvarchar(50)

)Step 3. Discover data

In the first step of data transformation, we inspect our source data to identify the variables of interest. Comparing the source data to the destination table, we see our variables of interest are country, state, abbreviation, and city. Looking at the JSON data, we can see our variables of interest are stored in key:value pairs, along with a nested JSON array.

Step 4. Plan data transformation

In the discovery step we identified our variables of interest, now let’s put together a plan to transform the data. We need to pull out each object in the JSON and insert it into a separate column in the destination table.

This is a data transformation technique called flattening since we’re transforming the hierarchical JSON data into a non-hierarchical structure. SQL Server has a function called OPENJSON that can be used to flatten JSON. A real data transformation plan might look something like this:

| Requirement # | Business Logic |

|---|---|

| 1 | Insert data from sourceJSONTable into destinationTable |

| 2 | Select the following objects:

|

| 3 | Flatten JSON using OPENJSON functionality |

| 4 | De-duplicate records leaving only distinct records |

Step 5. Perform the data transformation

We can write a simple SQL script that utilizes the OPENJSON function in SQL Server to perform the data transformation. The OPENJSON function flattens each of the JSON hierarchies in our source data.

insert into destinationTable(country, [state], abbreviation, city)

select country,

t.value as [state],

c.[key] as abbreviation,

c.value as city

from sourceJSONTable st

cross apply OPENJSON(st.jsonData)

with (country varchar(20), states nvarchar(max) as JSON, cities nvarchar(max) as JSON) s

cross apply OPENJSON(s.states) t

cross apply OPENJSON(s.cities) a

cross apply openJSON(a.value) c

where (t.value = 'Minnesota' and c.[key] = 'MN')

or (t.value = 'California' and c.[key] = 'CA')

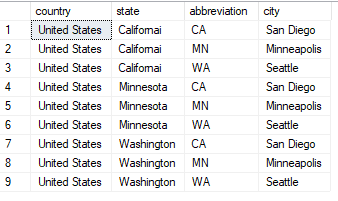

or (t.value = 'Washington' and c.[key] = 'WA')Notice the WHERE clause filters the data based on the state and abbreviation relationship. That’s because the cross apply causes the records to join all the other records in a many-to- many relationship, duplicating our state data. For example, without filtering in the WHERE clause, our data would look like this:

We would be inserting a bunch of incorrect data into the destination table if we didn’t include filtering in our data transformation. Filtering, removing duplicates, joining data, or feature engineering can all be a part of performing the data transformation before loading it into storage.

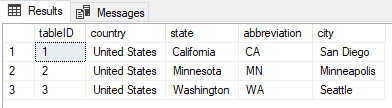

6. Review the data transformation

After executing the INSERT script, select data from the destination table to verify the records look correct.

select * from destinationTable

As expected, we see our three rows in the destination table. And there you have it—that’s how to transform data using the 4-step process we outlined earlier: discovering, planning, performing, reviewing.

4. Benefits of data transformation

The biggest benefit of transforming data is that it makes data easier to work with by improving consistency and data quality. In general, data plays an important role in organizations, allowing them to understand their users, audit financials, revise internal processes, identify trends, and make forecasts and predictions.

Even with all of their data, unless they utilize data transformations to join, aggregate, consolidate, or enrich their data, gaining insight from it could be impossible. Consider the following ways data transformation benefits organizations:

Improved data quality

The data transformation pipeline cleans up things like duplicates, null values, and outliers. We also make sure the structure of the data is transformed to match the destination system so it can be queried and accessed the same way. Beyond cleaning and restructuring, data transformation can also include aggregating and feature engineering. Ultimately, improving data quality through data transformation makes modeling the data much easier.

Better data accessibility

Transforming data so it can be stored in the same place overcomes one of the biggest obstacles in data analytics: Breaking down data silos. Organizations tend to keep data in silos. For example, customer data could be in one database while product event logs are in another, and sales data in yet another. Data transformation makes it possible to store all the records in one place, in the same format.

Simpler data governance

Since data can be generated from many sources and stored in many silos, managing data can be very challenging. Data transformation can be used to generate metadata to help organizations keep track of which data are sensitive and need to be regulated. Good metadata makes data easier to manage.

5. Challenges of data transformation

There are many challenges that come with trying to transform data. Working with big data can be very resource intensive and expensive because it takes a lot of processing power and computation to transform billions of records. Data transformation also requires both a lot of domain knowledge, and a lot of expertise with the underlying technologies used in the ETL/ELT pipelines.

Compute-resource intensive

Big data means powerful resources are required to transform it. If you don’t have powerful hardware handling the data transformation pipeline, the systems can run out of memory or be too inefficient to keep up with all of the data. For example, I was running a data transformation on millions of records, joining data from one table with another.

The server I was using didn’t have enough RAM to complete the operation, so my process kept running into Out Of Memory errors. When these types of errors happen, it takes a lot of time and effort to re-plan and retry the data transformation.

Expensive

Data transformation can be an expensive endeavor since it can require a lot of storage and a lot of expertise. ETL/ELT pipelines need to store the transformed data so it can be used for analysis. That means an organization needs a data warehouse in addition to the databases that store the raw data.

Beyond the costs of additional storage, Data engineers, analysts, and data scientists are well-paying, in-demand jobs. Organizations might not be able to afford many of them, leaving a small team with the burden of managing big data operations.

Requires domain knowledge

As an experienced product analyst working in education technology for the past 10 years, I’m all too familiar with the challenges of transforming education data. For example, there are multiple calculations used to aggregate attendance data, or generate GPAs, or score standardized tests.

Without the domain knowledge, data transformation can result in errors and inconsistencies that lead to bad analysis and incorrect predictions. It can take a lot of time and effort to develop the domain knowledge necessary for effective data transformation.

6. Summary

As organizations capture bigger data from more and more sources, efficiently transforming data as a part of an ETL/ELT pipeline becomes necessary for data-driven decision making. Data transformation is the process by which raw data is made to work with downstream processes and is done in four steps: discovering, planning, performing, reviewing.

There are many benefits to transforming data, such as improving the data quality, enabling and empowering data analytics and data modeling processes, and improving data governance practices. Although data transformation improves an organization’s ability to make data-driven decisions, it can be very challenging to transform big data. Working with big data requires powerful computer hardware, lots of storage space, and expert-level domain knowledge.

Regardless of the challenges, data transformation remains an important aspect of data management, and it helps organizations get the most from their data. If this guide and tutorial to data transformation has piqued your interest in the field of data analytics, why not try out our free, 5-day introductory data analytics short course?

You might also be interested in the following topics: