Data analytics is all about looking at various factors to see how they impact certain situations and outcomes. When dealing with data that contains more than two variables, you’ll use multivariate analysis.

Multivariate analysis isn’t just one specific method—rather, it encompasses a whole range of statistical techniques. These techniques allow you to gain a deeper understanding of your data in relation to specific business or real-world scenarios.

So, if you’re an aspiring data analyst or data scientist, multivariate analysis is an important concept to get to grips with.

In this post, we’ll provide a complete introduction to multivariate analysis. We’ll delve deeper into defining what multivariate analysis actually is, and we’ll introduce some key techniques you can use when analyzing your data. We’ll also give some examples of multivariate analysis in action.

Want to skip ahead to a particular section? Here’s what we’ll cover:

- What is multivariate analysis?

- Multivariate data analysis techniques (with examples)

- What are the advantages of multivariate analysis?

- Key takeaways and further reading

Ready to demystify multivariate analysis? Let’s do it.

1. What is multivariate analysis?

In data analytics, we look at different variables (or factors) and how they might impact certain situations or outcomes.

For example, in marketing, you might look at how the variable “money spent on advertising” impacts the variable “number of sales.” In the healthcare sector, you might want to explore whether there’s a correlation between “weekly hours of exercise” and “cholesterol level.” This helps us to understand why certain outcomes occur, which in turn allows us to make informed predictions and decisions for the future.

There are three categories of analysis to be aware of:

- Univariate analysis, which looks at just one variable

- Bivariate analysis, which analyzes two variables

- Multivariate analysis, which looks at more than two variables

As you can see, multivariate analysis encompasses all statistical techniques that are used to analyze more than two variables at once. The aim is to find patterns and correlations between several variables simultaneously—allowing for a much deeper, more complex understanding of a given scenario than you’ll get with bivariate analysis.

An example of multivariate analysis

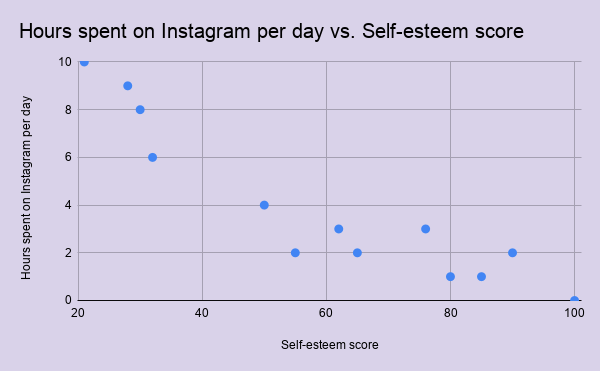

Let’s imagine you’re interested in the relationship between a person’s social media habits and their self-esteem. You could carry out a bivariate analysis, comparing the following two variables:

- How many hours a day a person spends on Instagram

- Their self-esteem score (measured using a self-esteem scale)

You may or may not find a relationship between the two variables; however, you know that, in reality, self-esteem is a complex concept. It’s likely impacted by many different factors, not just how many hours a person spends on Instagram. You might also want to consider factors such as age, employment status, how often a person exercises, and relationship status. To determine the extent to which each of these variables correlates with self-esteem, and with each other, you’d need to run a multivariate analysis.
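To make this concrete, here is a minimal sketch of a first multivariate look at such data: a correlation matrix covering every pair of variables at once. The data is synthetic and the variable names, coefficients, and sample size are assumptions made up for illustration; nothing here comes from a real study.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
n = 500

# Hypothetical, synthetic data: these numbers are invented for illustration.
instagram_hours = rng.uniform(0, 6, n)
exercise_hours = rng.uniform(0, 10, n)
age = rng.uniform(18, 65, n)
self_esteem = (
    25
    - 1.0 * instagram_hours
    + 0.8 * exercise_hours
    + 0.05 * age
    + rng.normal(0, 2, n)
)

names = ["instagram_hours", "exercise_hours", "age", "self_esteem"]
data = np.column_stack([instagram_hours, exercise_hours, age, self_esteem])

# Pearson correlation between every pair of variables (one column per variable).
corr = np.corrcoef(data, rowvar=False)

for name, row in zip(names, corr):
    print(f"{name:>16}: " + "  ".join(f"{value:+.2f}" for value in row))
```

Each row of the printed matrix shows how one variable correlates with all the others, which is something a single bivariate comparison cannot give you.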

So we know that multivariate analysis is used when you want to explore more than two variables at once. Now let’s consider some of the different techniques you might use to do this.

2. Multivariate data analysis techniques and examples

There are many different techniques for multivariate analysis, and they can be divided into two categories:

- Dependence techniques

- Interdependence techniques

So what’s the difference? Let’s take a look.

Multivariate analysis techniques: Dependence vs. interdependence

When we use the terms “dependence” and “interdependence,” we’re referring to different types of relationships within the data. To give a brief explanation:

Dependence methods

Dependence methods are used when one or more of the variables are dependent on others. They ask whether the values of two or more independent variables can be used to explain, describe, or predict the value of another, dependent variable. This is often framed as cause and effect, although a statistical relationship on its own does not prove causation. To give a simple example, the dependent variable of “weight” might be predicted by independent variables such as “height” and “age.”

In machine learning, dependence techniques are used to build predictive models. The analyst enters input data into the model, specifying which variables are independent and which ones are dependent—in other words, which variables they want the model to predict, and which variables they want the model to use to make those predictions.

Interdependence methods

Interdependence methods are used to understand the structural makeup and underlying patterns within a dataset. In this case, no variable is singled out as dependent on the others, so you’re not trying to predict or explain one variable from the rest. Rather, interdependence methods seek to give meaning to a set of variables or to group them together in meaningful ways.

So: One is about the effect of certain variables on others, while the other is all about the structure of the dataset.

With that in mind, let’s consider some useful multivariate analysis techniques. We’ll look at:

- Multiple linear regression

- Multiple logistic regression

- Multivariate analysis of variance (MANOVA)

- Factor analysis

- Cluster analysis

Multiple linear regression

Multiple linear regression is a dependence method which looks at the relationship between one dependent variable and two or more independent variables. A multiple regression model will tell you the extent to which each independent variable has a linear relationship with the dependent variable. This is useful as it helps you to understand which factors are likely to influence a certain outcome, allowing you to estimate future outcomes.

Example of multiple regression:

As a data analyst, you could use multiple regression to predict crop growth. In this example, crop growth is your dependent variable and you want to see how different factors affect it. Your independent variables could be rainfall, temperature, amount of sunlight, and amount of fertilizer added to the soil. A multiple regression model would estimate how much crop growth changes with each independent variable while the others are held constant, and its R² value would show you the proportion of variance in crop growth that the independent variables account for together.
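The crop growth example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the data is simulated, and the units, the "true" coefficients, and the noise level are all invented. The model is fitted by ordinary least squares using NumPy.

```python
import numpy as np

rng = np.random.default_rng(seed=1)
n = 200

# Hypothetical predictors (synthetic data, illustrative units only).
rainfall = rng.uniform(20, 120, n)     # mm per month
temperature = rng.uniform(10, 30, n)   # degrees Celsius
sunlight = rng.uniform(4, 12, n)       # hours per day
fertilizer = rng.uniform(0, 50, n)     # kg per hectare

# Assumed "true" relationship, used only to generate the example data.
true_coefs = np.array([0.05, 0.30, 0.80, 0.10])
X = np.column_stack([rainfall, temperature, sunlight, fertilizer])
growth = 2.0 + X @ true_coefs + rng.normal(0, 1.0, n)

# Add an intercept column and fit by ordinary least squares.
X_design = np.column_stack([np.ones(n), X])
coefs, *_ = np.linalg.lstsq(X_design, growth, rcond=None)

# R^2: share of the variance in growth explained by the model as a whole.
predicted = X_design @ coefs
ss_res = np.sum((growth - predicted) ** 2)
ss_tot = np.sum((growth - growth.mean()) ** 2)
r_squared = 1 - ss_res / ss_tot

labels = ["intercept", "rainfall", "temperature", "sunlight", "fertilizer"]
for label, value in zip(labels, coefs):
    print(f"{label:>12}: {value:.3f}")
print(f"R^2: {r_squared:.3f}")
```

Each fitted coefficient estimates the change in crop growth for a one-unit change in that variable with the others held fixed, while R² summarizes how well the variables explain growth together.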

Source: Public domain via Wikimedia Commons

Multiple logistic regression

Logistic regression analysis is used to calculate (and predict) the probability of a binary event occurring. A binary event is one where there are only two possible outcomes; either the event occurs (1) or it doesn’t (0). So, based on a set of independent variables, logistic regression can predict how likely it is that a certain scenario will arise. It is also used for classification: each case is assigned to whichever of the two outcomes the model considers more likely.

Example of logistic regression:

Let’s imagine you work as an analyst within the insurance sector and you need to predict how likely it is that each potential customer will make a claim. You might enter a range of independent variables into your model, such as age, whether or not they have a serious health condition, their occupation, and so on. Using these variables, a logistic regression analysis will calculate the probability of the event (making a claim) occurring. Another oft-cited example is the filters used to classify email as “spam” or “not spam.”
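As a rough sketch of the insurance example, the code below simulates customers and fits a logistic regression by plain gradient descent in NumPy. The two predictors, the "true" weights, the learning rate, and the iteration count are all assumptions chosen for illustration; a statistics or machine learning library would normally handle the fitting for you.

```python
import numpy as np

def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(seed=2)
n = 1000

# Hypothetical customer data (synthetic).
age = rng.uniform(18, 80, n)
has_condition = rng.integers(0, 2, n)  # 1 = serious health condition

# Standardize age so that gradient descent converges quickly.
age_std = (age - age.mean()) / age.std()
X = np.column_stack([np.ones(n), age_std, has_condition])

# Assumed "true" relationship, used only to simulate who makes a claim.
true_weights = np.array([-1.0, 0.8, 1.5])
claimed = (rng.uniform(size=n) < sigmoid(X @ true_weights)).astype(float)

# Fit the weights by gradient descent on the average log loss.
weights = np.zeros(X.shape[1])
learning_rate = 0.5
for _ in range(5000):
    gradient = X.T @ (sigmoid(X @ weights) - claimed) / n
    weights -= learning_rate * gradient

# Predicted probability of a claim, and the resulting 0/1 classification.
probabilities = sigmoid(X @ weights)
predicted_class = (probabilities >= 0.5).astype(int)
accuracy = np.mean(predicted_class == claimed)

print("fitted weights:", np.round(weights, 2))
print(f"training accuracy: {accuracy:.2f}")
```

The `probabilities` array is the regression output (how likely each customer is to claim), and thresholding it at 0.5 turns the same model into a classifier.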

Multivariate analysis of variance (MANOVA)

Multivariate analysis of variance (MANOVA) is used to measure the effect of one or more independent variables on two or more dependent variables. With MANOVA, it’s important to note that the independent variables are categorical, while the dependent variables are metric in nature. A categorical variable is one whose values fall into distinct categories; for example, the variable “employment status” could take values such as “employed full-time,” “employed part-time,” “unemployed,” and so on. A metric variable is measured quantitatively and takes on a numerical value.

In MANOVA analysis, you’re looking at various combinations of the independent variables to compare how they differ in their effects on the dependent variables.

Example of MANOVA:

Let’s imagine you work for an engineering company that is on a mission to build a super-fast, eco-friendly rocket. You could use MANOVA to measure the effect that various design combinations have on both the speed of the rocket and the amount of carbon dioxide it emits. In this scenario, your categorical independent variables could be:

- Engine type, categorized as E1, E2, or E3

- Material used for the rocket exterior, categorized as M1, M2, or M3

- Type of fuel used to power the rocket, categorized as F1, F2, or F3

Your metric dependent variables are speed in kilometers per hour, and carbon dioxide measured in parts per million. Using MANOVA, you’d test different combinations (e.g. E1, M1, and F1 vs. E1, M2, and F1, vs. E1, M3, and F1, and so on) to calculate the effect of all the independent variables. This should help you to find the optimal design solution for your rocket.
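To give a feel for the calculation, here is a deliberately simplified sketch: a one-way MANOVA using engine type only (not the full three-factor design described above), summarized by Wilks’ lambda. The group means, noise levels, and sample sizes are invented for illustration, and no significance test is performed.

```python
import numpy as np

rng = np.random.default_rng(seed=3)
per_group = 30

# Hypothetical group means for [speed in km/h, CO2 in ppm]; synthetic data.
group_means = {
    "E1": [27000.0, 410.0],
    "E2": [27500.0, 430.0],
    "E3": [28200.0, 400.0],
}
noise_sd = np.array([300.0, 10.0])
groups = {
    name: rng.normal(mean, noise_sd, size=(per_group, 2))
    for name, mean in group_means.items()
}

all_data = np.vstack(list(groups.values()))
grand_mean = all_data.mean(axis=0)

# W: within-group scatter matrix; B: between-group scatter matrix.
W = np.zeros((2, 2))
B = np.zeros((2, 2))
for samples in groups.values():
    group_mean = samples.mean(axis=0)
    centered = samples - group_mean
    W += centered.T @ centered
    diff = (group_mean - grand_mean).reshape(-1, 1)
    B += len(samples) * (diff @ diff.T)

# Wilks' lambda: det(W) / det(W + B). Values near 0 suggest the groups
# differ on the dependent variables; values near 1 suggest they do not.
wilks_lambda = np.linalg.det(W) / np.linalg.det(W + B)
print(f"Wilks' lambda: {wilks_lambda:.3f}")
```

Both dependent variables are analyzed together through the scatter matrices, which is what distinguishes MANOVA from running a separate analysis of variance for speed and for carbon dioxide.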

Factor analysis

Factor analysis is an interdependence technique which seeks to reduce the number of variables in a dataset. If you have too many variables, it can be difficult to find patterns in your data. At the same time, models created using datasets with too many variables are susceptible to overfitting. Overfitting is a modeling error that occurs when a model fits too closely and specifically to a certain dataset, making it less generalizable to future datasets, and thus potentially less accurate in the predictions it makes.

Factor analysis works by detecting sets of variables which correlate highly with each other. These variables may then be condensed into a single variable. Data analysts will often carry out factor analysis to prepare the data for subsequent analyses.

Factor analysis example:

Let’s imagine you have a dataset containing data pertaining to a person’s income, education level, and occupation. You might find a high degree of correlation among each of these variables, and thus reduce them to the single factor “socioeconomic status.” You might also have data on how happy they were with customer service, how much they like a certain product, and how likely they are to recommend the product to a friend. Each of these variables could be grouped into the single factor “customer satisfaction” (as long as they are found to correlate strongly with one another). Even though you’ve reduced several variables to just one factor, you lose relatively little information, because each factor captures most of the variation that its underlying variables share. With your “streamlined” dataset, you’re now ready to carry out further analyses.
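The idea of condensing correlated variables can be sketched as follows. Note the swap: this uses principal component extraction from the correlation matrix, a simpler relative of a full factor analysis, and it skips the rotation step that is usually applied to make loadings easier to read. The six variables are simulated from two hypothetical underlying factors, and the noise levels are assumptions.

```python
import numpy as np

rng = np.random.default_rng(seed=4)
n = 500

# Two hypothetical underlying factors (synthetic).
socioeconomic = rng.normal(size=n)
satisfaction = rng.normal(size=n)

def noisy(latent, noise_sd):
    """An observed variable: the underlying factor plus measurement noise."""
    return latent + rng.normal(scale=noise_sd, size=n)

names = ["income", "education", "occupation",
         "service_rating", "product_rating", "recommend"]
data = np.column_stack(
    [noisy(socioeconomic, 0.5) for _ in range(3)]
    + [noisy(satisfaction, 0.8) for _ in range(3)]
)

# Eigen-decomposition of the correlation matrix, largest eigenvalue first.
corr = np.corrcoef(data, rowvar=False)
eigenvalues, eigenvectors = np.linalg.eigh(corr)
order = np.argsort(eigenvalues)[::-1]
eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]

# Kaiser criterion: keep components whose eigenvalue exceeds 1.
n_factors = int(np.sum(eigenvalues > 1.0))
loadings = eigenvectors[:, :n_factors] * np.sqrt(eigenvalues[:n_factors])
explained = eigenvalues[:n_factors].sum() / eigenvalues.sum()

print(f"factors kept: {n_factors}")
print(f"share of total variance retained: {explained:.2f}")
for name, row in zip(names, loadings):
    print(f"{name:>15}: " + "  ".join(f"{value:+.2f}" for value in row))
```

The retained share of variance is below 1, which is the trade-off of any such reduction: six variables become a handful of factors at the cost of a modest amount of detail.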

Cluster analysis

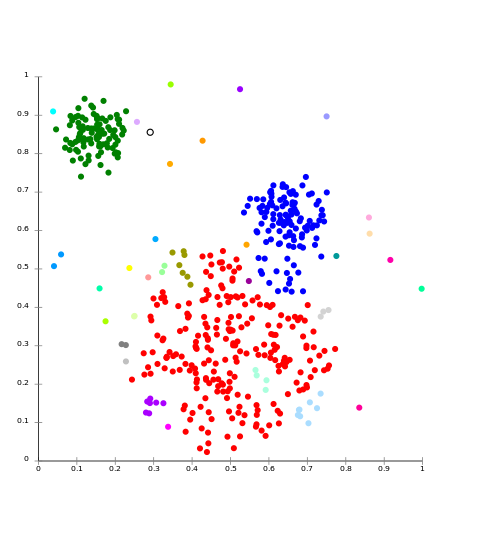

Another interdependence technique, cluster analysis is used to group similar items within a dataset into clusters.

When grouping data into clusters, the aim is for the data points in one cluster to be more similar to each other than they are to data points in other clusters. This is measured in terms of intracluster and intercluster distance. Intracluster distance looks at the distance between data points within one cluster. This should be small. Intercluster distance looks at the distance between data points in different clusters. This should ideally be large. Cluster analysis helps you to understand how data in your sample is distributed, and to find patterns.
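Here is a minimal sketch of these two distances using k-means, one common clustering algorithm (the choice of algorithm is an assumption, as are the synthetic points and the simple summary measures). To keep the example deterministic, it starts from a farthest-point initialization; library implementations typically use randomized starting points with several restarts instead.

```python
import numpy as np

rng = np.random.default_rng(seed=5)

# Synthetic 2-D data points scattered around three hypothetical centers.
true_centers = np.array([[0.0, 0.0], [6.0, 0.0], [3.0, 6.0]])
points = np.vstack(
    [rng.normal(center, 0.6, size=(50, 2)) for center in true_centers]
)

def farthest_point_init(points, k):
    """Pick the first point, then repeatedly the point farthest from those chosen."""
    centroids = [points[0]]
    for _ in range(k - 1):
        nearest = np.min(
            [np.linalg.norm(points - c, axis=1) for c in centroids], axis=0
        )
        centroids.append(points[nearest.argmax()])
    return np.array(centroids)

def k_means(points, k, n_iterations=50):
    centroids = farthest_point_init(points, k)
    for _ in range(n_iterations):
        # Assign every point to its nearest centroid.
        distances = np.linalg.norm(
            points[:, None, :] - centroids[None, :, :], axis=2
        )
        labels = distances.argmin(axis=1)
        # Move each centroid to the mean of its points (keep it if empty).
        new_centroids = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids

labels, centroids = k_means(points, k=3)

# Intracluster: average distance from each point to its own centroid.
intra = np.linalg.norm(points - centroids[labels], axis=1).mean()
# Intercluster: smallest distance between any two centroids.
pairwise = np.linalg.norm(centroids[:, None, :] - centroids[None, :, :], axis=2)
inter = pairwise[np.triu_indices(len(centroids), k=1)].min()

print(f"intracluster distance: {intra:.2f}")
print(f"intercluster distance: {inter:.2f}")
```

A small intracluster distance alongside a large intercluster distance indicates compact, well-separated clusters.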

Learn more: What is Cluster Analysis? A Complete Beginner’s Guide

Cluster analysis example:

A prime example of cluster analysis is audience segmentation. If you were working in marketing, you might use cluster analysis to define different customer groups which could benefit from more targeted campaigns. As a healthcare analyst, you might use cluster analysis to explore whether certain lifestyle factors or geographical locations are associated with higher or lower cases of certain illnesses. Because it’s an interdependence technique, cluster analysis is often carried out in the early stages of data analysis.

Source: Chire, CC BY-SA 3.0 via Wikimedia Commons

More multivariate analysis techniques

This is just a handful of multivariate analysis techniques used by data analysts and data scientists to understand complex datasets. If you’re keen to explore further, check out discriminant analysis, conjoint analysis, canonical correlation analysis, structural equation modeling, and multidimensional scaling.

3. What are the advantages of multivariate analysis?

The one major advantage of multivariate analysis is the depth of insight it provides. In exploring multiple variables, you’re painting a much more detailed picture of what’s occurring—and, as a result, the insights you uncover are much more applicable to the real world.

Remember our self-esteem example back in section one? We could carry out a bivariate analysis, looking at the relationship between self-esteem and just one other factor; and, if we found a strong correlation between the two variables, we might be inclined to conclude that this particular variable is a strong determinant of self-esteem. However, in reality, we know that self-esteem can’t be attributed to one single factor. It’s a complex concept; in order to create a model that we could really trust to be accurate, we’d need to take many more factors into account. That’s where multivariate analysis really shines; it allows us to analyze many different factors and get closer to the reality of a given situation.

4. Key takeaways and further reading

In this post, we’ve learned that multivariate analysis is used to analyze data containing more than two variables. To recap, here are some key takeaways:

- The aim of multivariate analysis is to find patterns and correlations between several variables simultaneously

- Multivariate analysis is especially useful for analyzing complex datasets, allowing you to gain a deeper understanding of your data and how it relates to real-world scenarios

- There are two types of multivariate analysis techniques: Dependence techniques, which look at how independent variables explain or predict dependent variables, and interdependence techniques, which explore the structure of a dataset

- Key multivariate analysis techniques include multiple linear regression, multiple logistic regression, MANOVA, factor analysis, and cluster analysis—to name just a few
