Predictive analytics is undoubtedly one of the most lauded tools in data science and machine learning. By identifying trends and patterns, predictive analytics helps forecast future events and can even fill in the gaps in datasets by automating otherwise time-consuming imputation tasks. One of the most common statistical techniques used in predictive analytics is linear regression.

Linear regression is useful as it allows us to model the relationship between one or more input variables and a dependent output variable. It’s also relatively easy to grasp and can be applied in many disciplines, from finance and marketing to medicine.

But how does linear regression work? What are its strengths and weaknesses? In this beginner’s guide, we’ll cover everything you need to know to get started with linear regression. To simplify things, we’ll use plain English, explaining any jargon as we go. Where necessary, we’ve also included links to some excellent free statistics resources that will explain the math clearly.

We’ll cover:

- How is linear regression performed?

- Why is linear regression important?

- What are the key assumptions of effective linear regression?

- How do you prepare data for performing linear regression?

- Examples of linear regression

- Preparing a linear regression model: Four techniques

- Summary

Ready to get all the basics of linear regression down? Then let’s dive in.

1. How is linear regression performed?

We don’t want to presume any prior knowledge here, so before getting into how we can perform linear regression, let’s cover the basics.

What is linear regression?

In the simplest terms, a linear regression model allows us to see how one thing changes based on how other things change. It uses one or more independent input variables to predict a single, dependent output variable. This sounds technical. However, all it means is that the predicted value you are looking for (the output variable) depends on the values that you put into the model (the input variables).

When there is only one input variable we call this simple linear regression. If there are two or more inputs, this is multiple linear regression.

At first glance, the concept of linear regression may seem intimidating. However, it’s not that complex, and you may even recall studying it in school. At some point, you will likely have plotted two sets of data points against one another then drawn what’s known as a ‘line of best fit’ on top. You will then have used this line to predict values missing from your original dataset. In its most basic form, that’s all that linear regression is.

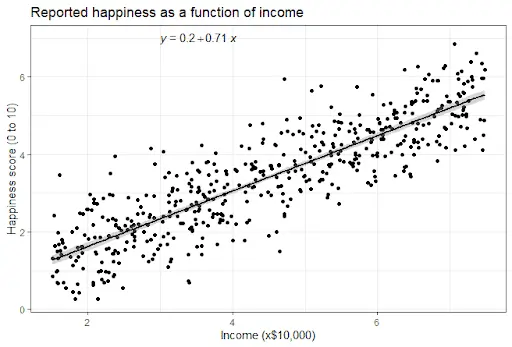

Source: scribbr.com

How does linear regression work?

Linear regression uses linear equations that we can plot onto a graph. While there are different linear equations for different combinations of variables, the simplest equation (with only one input variable) has the form:

Y = mX + b

Be aware that you may come across versions of this equation that use different symbols, or slightly different ordering (e.g. Y = a + bX) but they essentially have the same form.

In the case of our equation:

- Y describes the dependent variable you are trying to predict.

- X describes the input variable.

- m describes the slope of the line.

- b describes the estimated intercept at the y-axis (the vertical axis on the graph).

Once again, you probably learned something about linear equations during algebra lessons as a kid. If you don’t remember, that’s okay, but it’s probably worth brushing up on the basics before we go any further!
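
To make the equation concrete, here is a minimal Python sketch. The function name `predict` and the slope and intercept values are made up purely for illustration; in a real model they would be estimated from your data.

```python
def predict(x, m, b):
    """Return the predicted Y for an input X on the line Y = mX + b."""
    return m * x + b


# Hypothetical line: slope of 2 and intercept of 1 (illustrative values only).
slope = 2.0
intercept = 1.0

for x in [0, 1, 2, 3]:
    print(x, predict(x, slope, intercept))
```

Each step of 1 along the X axis raises the prediction by the slope, and the prediction at X = 0 is the intercept.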

What kinds of data does linear regression model?

You can use linear regression to model the relationship between various input and output variables. However, the values must always be quantitative, i.e. numeric. While you can use linear regression to model qualitative (or categorical) data, this involves first assigning numerical values to the data. This technique is known as dummy coding, which we’ll cover in more detail in section 4.

As in the graph shown, values used for linear regression could include a numerical measure of happiness vs income, or something else, like weight vs height. You can even use linear regression to try and predict how someone’s height affects how much they earn. However, this highlights an important limitation of linear regression—while plotting people’s heights against their salaries may seemingly indicate causation, that’s not necessarily the case. Common sense tells us that someone’s height is unlikely to impact how much they earn.

This illustrates one limitation of linear regression. Namely, it is useful for identifying the correlation between two variables but not necessarily causation. This is a very basic fact worth considering when carrying out any predictive analysis using linear regression models: common sense is always required.

Why is it called linear regression?

Just as a curiosity point, you might wonder: why is it called linear regression? While the name makes the technique sound more complex than it is, it is really just a simple descriptor. ‘Linear’ refers to the fact that you are modeling a straight-line relationship between two (or more) variables. Meanwhile, ‘regression’ comes from the 19th-century statistician Francis Galton, who observed that the children of unusually tall or short parents tended to ‘regress’ toward the average height. The name stuck and came to describe the technique of fitting a line to data. It makes sense once you know where it comes from!

2. Why is linear regression important?

Linear regression is important for several reasons. Firstly, it has pure statistical uses:

- Linear regression can help you to predict future outcomes or identify missing data.

- Linear regression can help you correct or spot likely errors in a dataset, identifying or estimating the correct values.

- Linear regression can help you measure the strength of the relationship between variables by identifying the R-squared value (which tells you how much of the variation in the dependent variable is explained by the independent variables).
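
To see what the R-squared value measures, here is a small sketch in plain Python. The function name `r_squared` and the numbers are our own illustrative choices; the formula is the standard one, which compares the model’s squared errors with those of simply guessing the average every time.

```python
def r_squared(observed, predicted):
    """Share of the variation in `observed` that `predicted` accounts for."""
    mean_observed = sum(observed) / len(observed)
    # Squared error left over after using the model's predictions.
    ss_residual = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    # Squared error you would get by always guessing the mean.
    ss_total = sum((o - mean_observed) ** 2 for o in observed)
    return 1 - ss_residual / ss_total


observed = [3.0, 5.0, 7.0, 9.0]
perfect_predictions = [3.0, 5.0, 7.0, 9.0]
mean_only_predictions = [6.0, 6.0, 6.0, 6.0]

print(r_squared(observed, perfect_predictions))    # 1.0
print(r_squared(observed, mean_only_predictions))  # 0.0
```

A value of 1 means the predictions explain all of the variation, while 0 means the model does no better than guessing the mean.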

Beyond this, it also has a wide range of applications. Over the years, it has found uses in various disciplines, ranging from economics to the natural and social sciences. In short, it is very well understood by statisticians. This means data analysts have a clear and well-tested set of guidelines to help model data quickly and accurately.

In recent years, linear regression’s use in business has also grown, thanks to the incredible amounts of data businesses now have access to and advances in machine learning technology. This allows business leaders to make much more informed decisions, instead of relying on personal experience or their gut feeling.

To summarize, linear regression is important because it is:

- Generally accurate

- Easy to understand

- Widely applicable

- More dependable than business instinct alone

To be a successful data analyst, you can’t do without linear regression!

3. What are the key assumptions of effective linear regression?

At the end of the last section, we mentioned that linear regression generally produces accurate results, which is one reason it is such a popular predictive analytics tool. However, it is not infallible. As with any statistical technique, it rests on some key background assumptions. Linear regression models only provide useful results if the following assumptions hold for the source data:

Linearity

Linearity assumes that the relationship between the dependent and independent variables is linear. This simply means that the dependent variable changes at a constant rate as the independent variable changes.

Multivariate normality

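Multivariate normality assumes that all the residual values in a multiple regression model are normally distributed. This is important because the confidence intervals and significance tests that come with a regression model rely on it. If the residuals are far from normal, those measures of uncertainty become less reliable.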

However, to fully grasp this, the terms need some unpicking!

We’ll start with the definition of ‘residuals’. In statistics, residual values describe the difference between an observed value of a variable and the value predicted by the model. When talking about residuals in terms of multivariate normality, we are referring only to this difference value, not to the independent variables themselves (a common mistake).

Meanwhile, ‘normally distributed’ means that the values follow a symmetric, bell-shaped curve: data points near the mean (or average) are more frequent than data points far from the mean, and values fall above and below the mean equally often.

So when we say ‘all residuals are normally distributed’, this simply means that the differences between observed and predicted values are spread symmetrically around zero, with small errors more common than large ones. In other words, the values are not skewed or distorted.
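
As a rough illustration, the sketch below computes residuals and a simple skewness score in plain Python. The data and the function names are hypothetical. A skewness close to 0 suggests the residuals are symmetric, which is necessary for normality but does not prove it on its own.

```python
def residuals(observed, predicted):
    """Observed value minus predicted value, for each data point."""
    return [o - p for o, p in zip(observed, predicted)]


def skewness(values):
    """Population skewness: close to 0 when values are symmetric around their mean."""
    n = len(values)
    mean = sum(values) / n
    second_moment = sum((v - mean) ** 2 for v in values) / n
    third_moment = sum((v - mean) ** 3 for v in values) / n
    return third_moment / second_moment ** 1.5


# Hypothetical observed values and model predictions.
observed = [2.0, 4.5, 5.5, 8.0, 10.0]
predicted = [2.5, 4.0, 6.0, 8.0, 9.5]

res = residuals(observed, predicted)
print(res)
print(skewness(res))
```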

Homoscedasticity

Homoscedasticity is a big word for a relatively straightforward concept! It derives from Greek, means ‘same scatter’ (or same variance), and refers to the random ‘noise’ in the relationship between independent and dependent variables. The assumption here is that the spread of the errors is consistent throughout a dataset. For example: if happiness scores are scattered around the fitted line by about the same amount at low and high levels of wealth, the data are homoscedastic. If we find, however, that the scores sit tightly around the line at low wealth but are widely scattered at high wealth, we have what is known as heteroscedasticity. This is a problem since the model’s estimates of its own uncertainty (such as standard errors and confidence intervals) become unreliable.

If this is still unclear, this explanation of the difference between linearity and homoscedasticity might be a valuable read.

Independence

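Independence assumes that the observations in the dataset, and therefore the residuals, are not related to one another. (Relationships between the input variables themselves are a separate issue, covered under multicollinearity below.) Repeated measurements of the same person, or consecutive points in a time series, are common ways this assumption gets broken. It’s worth noting that it is often hard to determine independence by looking at the data alone. To be confident the observations aren’t related to one another, you’ll need to understand how the dataset was collected.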

No multicollinearity

Multicollinearity is another mouthful of a word that is deceptively straightforward. It describes a statistical phenomenon where two or more predictors in a multiple regression model are highly correlated with one another. The no multicollinearity assumption, therefore, states that there should be no strong linear relationship between the predictor variables in a multiple linear regression model. Why not? Because this can lead to an unstable measure of the relationship between predictor and output/response variables, making it hard to interpret the individual impact of each predictor on the output.

Now that you have a basic grasp of these assumptions, what are the practical steps you should take to ensure your data are ready for a linear regression model? Let’s find out.

4. How do you prepare data for performing linear regression?

Not surprisingly, a large part of preparing data for linear regression involves ensuring—as best you can—that the dataset fits the assumptions outlined in the previous section.

Because linear regression is so well-understood, there are many rules you can apply here. However, since all datasets are different and require different transformations, it’s best to use these as a guideline.

Nevertheless, some initial data-wrangling exercises you might want to consider include:

Dealing with missing data

If data is missing from your dataset you will need to decide what to do about it. You can either impute (or infer) the values, or you can remove the offending columns/rows altogether.
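
Here is a minimal sketch of both options in plain Python, using `None` to mark missing values. The data and function names are hypothetical, and mean imputation is just one simple way to infer a value; it is not the only one.

```python
# Hypothetical height measurements, with None marking missing entries.
heights = [170.0, None, 165.0, 180.0, None, 175.0]


def drop_missing(values):
    """Option 1: remove the missing entries altogether."""
    return [v for v in values if v is not None]


def impute_mean(values):
    """Option 2: replace each missing entry with the mean of the known values."""
    known = drop_missing(values)
    mean = sum(known) / len(known)
    return [mean if v is None else v for v in values]


print(drop_missing(heights))
print(impute_mean(heights))
```

Dropping rows shrinks the dataset, while imputing keeps every row at the cost of introducing estimated values, so the right choice depends on how much data is missing and why.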

Removing corrupt or noisy data

To keep your results as accurate as possible, you will need to remove any variables that aren’t required for the linear regression. Noisy variables will clutter the model and make it harder to interpret the results. You should identify and remove outliers or any other obviously erroneous data.
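
One common rule of thumb for spotting outliers (our choice for illustration, not the only option) is to flag values that lie more than 1.5 times the interquartile range beyond the first or third quartile. A sketch using Python’s standard `statistics` module, with made-up readings:

```python
import statistics


def remove_outliers_iqr(values, k=1.5):
    """Keep only values within k * IQR of the first and third quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]


# Hypothetical readings; 95 looks like a data-entry error.
readings = [10, 12, 11, 13, 12, 11, 95]
cleaned = remove_outliers_iqr(readings)
print(cleaned)
```

Whatever rule you use, check flagged values before deleting them, since an unusual value is not always a wrong one.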

Dummy coding categorical data

Another step is to dummy code any categorical variables. Dummy coding involves creating new columns for each category and assigning a ‘0’ or a ‘1’ to show whether the observation falls into that category or not. The purpose of dummy coding is to transform non-numeric data into a form that a linear regression model can use.
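
The idea can be sketched in a few lines of plain Python. The `dummy_code` function and the color data are hypothetical; data analysis libraries offer ready-made equivalents.

```python
def dummy_code(values):
    """Build one 0/1 column per category, keyed by category name."""
    categories = sorted(set(values))
    return {cat: [1 if v == cat else 0 for v in values] for cat in categories}


# Hypothetical categorical variable.
colors = ["red", "green", "blue", "green"]
dummies = dummy_code(colors)

for category, column in dummies.items():
    print(category, column)
```

In a regression that includes an intercept, one of these columns is usually dropped and treated as the reference category, because the full set of columns always adds up to 1 for every row.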

Standardizing the data

Finally, you’ll need to standardize the data. This means ensuring all measurement units are identical, e.g. all heights and weights are in metric, all currency values are the same, and so on. Standardizing also involves rescaling the data (meaning that the data points can be compared like-for-like on the same scale). You can achieve this by ensuring that the mean is 0 and the standard deviation is 1.
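
Rescaling to a mean of 0 and a standard deviation of 1 means subtracting the mean from each value and dividing by the standard deviation. A minimal sketch with made-up heights, using the population standard deviation (the sample version, `statistics.stdev`, is an equally reasonable choice):

```python
import statistics


def standardize(values):
    """Rescale values so that they have mean 0 and standard deviation 1."""
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)  # population standard deviation
    return [(v - mean) / sd for v in values]


# Hypothetical heights, already converted to a single unit (centimeters).
heights_cm = [160.0, 170.0, 180.0]
z_scores = standardize(heights_cm)
print(z_scores)
```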

To learn more about these basic data transformations, check out this complete guide to data cleaning.

Once you’ve completed these tasks, you’ll also have to check that your dataset meets your key assumptions in section 3. Let’s take a look at these next.

Check the linearity assumption

You can check the linearity assumption of your dataset by using a scatterplot. If the relationship between the variables is linear, the points will cluster roughly around a straight line. If they follow a curve or show no clear pattern, a non-linear approach will be more suitable.

Check the normality assumption

You can check the normality assumption using a histogram or normal probability plot. If the residuals (as defined in the previous section) are normally distributed, the histogram will be bell-shaped and the normal probability plot will be linear.

Check the homoscedasticity assumption

There are several ways to check for homoscedasticity, but a popular method is, again, simply to plot the data, this time plotting the residuals against the predicted values. If the data are homoscedastic, the residuals will form an even band of roughly constant width along the plot. If the data are heteroscedastic, the band will usually fan out, narrow, or otherwise change width in a non-uniform way.
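
A rough numeric complement to the plot is to compare how spread out the residuals are at low and high predicted values. The sketch below is a crude check of our own devising, not a formal statistical test, and all the numbers are invented. A ratio near 1 suggests a consistent spread, while a ratio far from 1 hints at heteroscedasticity.

```python
import statistics


def spread_ratio(fitted, residuals):
    """Residual spread for the upper half of fitted values divided by the lower half."""
    pairs = sorted(zip(fitted, residuals))  # order the residuals by fitted value
    half = len(pairs) // 2
    low = [r for _, r in pairs[:half]]
    high = [r for _, r in pairs[-half:]]
    return statistics.pstdev(high) / statistics.pstdev(low)


# Hypothetical fitted values with two sets of residuals.
fitted = [1, 2, 3, 4, 5, 6, 7, 8]
even_residuals = [0.5, -0.5, 0.5, -0.5, 0.5, -0.5, 0.5, -0.5]
fanning_residuals = [0.1, -0.1, 0.2, -0.2, 1.0, -1.0, 2.0, -2.0]

print(spread_ratio(fitted, even_residuals))
print(spread_ratio(fitted, fanning_residuals))
```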

Check for multicollinearity

The simplest way to check the dataset for multicollinearity is to look at its correlation matrix, for example in MS Excel. If any pair of predictor variables has a correlation coefficient greater than 0.7 (or less than -0.7), there may be multicollinearity. You can then test a suspect predictor by running a regression analysis that predicts it from the other predictors. If the R-squared value (mentioned in section 2) is close to 1, then you have multicollinearity and will need to remove or combine the offending variables.
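
If you prefer code to a spreadsheet, the same pairwise check can be sketched in plain Python. The predictor names and values are invented, and the 0.7 threshold is the guideline mentioned above rather than a hard rule.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def flag_correlated(predictors, threshold=0.7):
    """Return the pairs of predictors whose absolute correlation exceeds the threshold."""
    names = list(predictors)
    flagged = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            r = pearson(predictors[a], predictors[b])
            if abs(r) > threshold:
                flagged.append((a, b, round(r, 3)))
    return flagged


# Hypothetical predictors: the two height columns measure the same thing.
predictors = {
    "height_cm": [150, 160, 170, 180, 190],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],
    "age": [30, 25, 40, 22, 35],
}
flagged = flag_correlated(predictors)
print(flagged)
```

Here only the two height columns are flagged, which makes sense: they carry the same information in different units, so one of them should be dropped.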

Next up, how is linear regression used in the real world?

5. Examples of linear regression

It’s good to explore linear regression in theory, but it also helps to understand how it applies in real life. Linear regression is one of the most widely used algorithms in data analytics, so let’s look at how it is applied in three key sectors.

Linear regression in healthcare

In medicine, linear regression applies to predictive tasks ranging from how patients will react to a new medication to medical research. It’s also commonly used to predict a metric known as patient length of stay (LOS). Using data on previous lengths of stay, diagnoses, illnesses, diet, age, and even geographic location, linear regression can predict a patient’s future LOS. This has numerous benefits.

First, it helps identify inefficiencies in the healthcare system. Second, it is a powerful quality metric (a shorter LOS generally means patients are getting the care they need). Finally, it’s a good financial measure as a longer LOS is typically more expensive. All these insights can be used to devise new strategies that reduce costs.

You can learn more about the relationship between data analytics and healthcare in this article.

Linear regression in finance

Finance is possibly the business area where linear regression is most commonly applied. That’s because it can forecast future events and automate tasks that would otherwise take analytics teams a lot of time. For instance, we often use linear regression for time series analysis. This involves analyzing data points spaced at regular intervals (for example, daily stock market data or annual shifts in the value of precious metals). Modeling these data can help predict future values like interest or exchange rates. We can also use linear regression to calculate risk in the insurance sector.

Linear regression in retail

Retail is another area where linear regression is commonly applied. A linear regression model can help forecast future product sales, predict stock replenishment needs, and even anticipate individual customer behavior. In the last case, a retailer might use linear regression to forecast how much a customer is likely to spend in-store, or online, based on their age, gender, and location, among other factors. With good data, a linear regression algorithm can help retailers to predict spending with a useful degree of accuracy. This allows them to improve their marketing strategies, tailoring messaging and customer experiences to target these individuals with products they are more likely to buy.

There are many other uses for linear regression, of course, but this highlights just how versatile the model can be. If you’re ready to dive in a bit deeper with some practical examples, check out this tutorial for linear regression in Excel and Python.

6. Preparing a linear regression model: Four techniques

In this section, we’ll briefly describe four techniques for preparing a linear regression model. These are common in machine learning. As a beginner, you might not need to know about them in detail but it’s worth being aware of them.

Since these techniques involve slightly more sophisticated theory, we’ll keep it high-level and direct you to more detailed explanations to learn more.

Simple linear regression

As described earlier, simple linear regression allows you to summarize and study the relationship between two continuous variables: a single independent input variable and a single dependent output variable. Examples include those outlined in section 1, such as the relationship between income and happiness, or that between height and weight. While simple linear regression is the easiest model to grasp, it has limitations. Namely, most real-world datasets don’t have just one input variable but several. In these cases, you’re more likely to use multiple linear regression, fitted using techniques such as those described below.

Learn more: Read more about simple linear regression.

Ordinary least squares

Ordinary least squares (also known as linear least squares) is a technique used to estimate the unknown parameters (such as the slope and intercept) for both simple and multiple linear regression. It aims to minimize the sum of the squared residuals, i.e. the squared differences between the observed data and the outputs predicted by the model. A smaller sum equates to more accurate predictions since the model better fits the data.
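
For simple linear regression, the least squares slope and intercept can be calculated directly with the standard textbook formulas. The sketch below uses invented data and our own function names, and it compares the fitted line with slightly nudged lines to show that the fitted one leaves the smallest sum of squared residuals.

```python
def fit_ols(xs, ys):
    """Least squares slope and intercept for a single input variable."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    m = sxy / sxx
    b = mean_y - m * mean_x
    return m, b


def sum_squared_residuals(xs, ys, m, b):
    """Total squared difference between observed and predicted values."""
    return sum((y - (m * x + b)) ** 2 for x, y in zip(xs, ys))


# Hypothetical data that roughly follows a straight line.
xs = [1, 2, 3, 4, 5]
ys = [2.2, 4.1, 6.2, 7.9, 10.1]

m, b = fit_ols(xs, ys)
print(m, b)
print(sum_squared_residuals(xs, ys, m, b))
print(sum_squared_residuals(xs, ys, m + 0.1, b))  # a nudged slope fits worse
```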

Learn more: Read more about ordinary least squares.

Gradient descent

Gradient descent (also known as steepest descent) is an optimization algorithm. We use it to find the parameter values (for linear regression, the coefficients and intercept) that minimize a cost function. A cost function, for clarity, is the measure of how far off a model’s predictions are from the actual values. By minimizing it step by step, you bring the predictions as close to the actual values as possible, improving the accuracy of your model. Gradient descent is widely used in machine learning because it scales well to large datasets.
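
Here is a minimal sketch of gradient descent for a line with one input variable, using mean squared error as the cost function. The learning rate, number of steps, and data are illustrative assumptions; in practice they need tuning for each dataset.

```python
def gradient_descent(xs, ys, learning_rate=0.05, steps=5000):
    """Fit Y = mX + b by repeatedly stepping downhill on the mean squared error."""
    m, b = 0.0, 0.0  # start from an arbitrary guess
    n = len(xs)
    for _ in range(steps):
        errors = [(m * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to m and b.
        grad_m = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        # Move each parameter a small step against its gradient.
        m -= learning_rate * grad_m
        b -= learning_rate * grad_b
    return m, b


# Hypothetical data generated from the line Y = 2X + 1.
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]

m, b = gradient_descent(xs, ys)
print(m, b)  # should end up very close to 2 and 1
```

If the learning rate is set too high, the steps overshoot and the values can blow up instead of settling, which is why it is usually kept small.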

Learn more: Read more about gradient descent.

Regularization

In linear regression, regularization is a technique used to avoid overfitting. Overfitting describes when a model fits training data too closely and therefore does not generalize well to new data. Regularization addresses this by adding a penalty to the loss function (a function that condenses the model’s errors into a single number to be minimized) that grows with the magnitude of the coefficients (the weights the model multiplies each input variable by). This penalty discourages the coefficients from becoming too large, and therefore reduces overfitting.
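
To see the penalty at work, the sketch below uses one common form of regularization, a squared (ridge-style) penalty, on a deliberately simplified model with a single input and no intercept. The function name, data, and penalty values are our own illustrative assumptions.

```python
def ridge_slope(xs, ys, penalty):
    """Slope m that minimizes sum((y - m*x)**2) + penalty * m**2 (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + penalty)


# Hypothetical centered data lying on the line Y = 2X.
xs = [-2, -1, 0, 1, 2]
ys = [-4, -2, 0, 2, 4]

print(ridge_slope(xs, ys, penalty=0))   # 2.0, the ordinary least squares slope
print(ridge_slope(xs, ys, penalty=10))  # 1.0, shrunk toward zero by the penalty
```

The larger the penalty, the more the coefficient is pulled toward zero, so choosing the penalty strength is a trade-off between fitting the training data and keeping the model simple.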

Learn more: Read more about regularization.

Summary

In this beginner’s introduction, we’ve explored everything you need to know about linear regression. We’ve learned that:

- Linear regression is a statistical technique commonly used in predictive analytics. It uses one or more known input variables to predict an unknown output variable.

- Generally speaking, linear regression is highly accurate, easy to understand, and has a wide range of business applications.

- As with any statistical technique, successful linear regression means making certain assumptions about a dataset’s features.

- In addition to general data cleaning, preparing a dataset for linear regression involves transforming it to meet these assumptions as closely as possible.

- Linear regression is widely used in business areas like healthcare, finance, and retail, as well as in economics and the natural and social sciences.

- Linear regression techniques include simple linear regression, ordinary least squares, gradient descent, and regularization.

While this covers the basics, there’s so much more to learn about linear regression and predictive analytics! To learn more about data analytics in general, why not start by signing up for this free, 5-day, data analytics short course?